from Twitter https://twitter.com/Rosie_G_

Category: Science

Scientific developments, comments, questions

What dreams may come: Why you’re having more vivid dreams during the Pandemic

An interesting side effect of the coronavirus pandemic is the number of people who say they are having vivid dreams. Many are turning to blogs and social media to describe their experiences.

While such dreams can be confusing or distressing, dreaming is normal and considered helpful in processing our waking situation, which for many people is far from normal at the moment.

While we are sleeping

Adults are recommended to sleep for seven to nine hours to maintain optimal health and well-being.

When we sleep we go through different stages which cycle throughout the night. These include light and deep sleep and a period known as rapid eye movement (REM) sleep, which features more prominently in the second half of the night. As the name implies, during REM sleep the eyes move rapidly.

Dreams can occur within all sleep stages but REM sleep is considered responsible for highly emotive and visual dreams.

We typically have several REM dream periods a night, yet we do not necessarily remember the experiences and content. Researchers have identified that REM sleep has unique properties that help us regulate our mood, performance and cognitive functioning.

Some say dreams act like a defence mechanism for our mental health, by giving us a simulated opportunity to work through our fears and to rehearse for stressful real-life events.

This global pandemic and associated restrictions may have impacts on how and when we sleep. This has positive effects for some and negative effects for others. Both situations can lead to heightened recollection of dreams.

Disrupted sleep and dreams

During this pandemic, studies from China and the UK show many people are reporting a heightened state of anxiety and are having shorter or more disturbed sleep.

Ruminating about the pandemic, either directly or via the media, just before going to bed can work against our need to relax and get a good night’s sleep. It may also provide fodder for dreams.

When we are sleep deprived, the pressure for REM sleep increases and so at the next sleep opportunity a so-called rebound in REM sleep occurs. During this time dreams are reportedly more vivid and emotional than usual.

More time in bed

Other studies indicate that people may be sleeping more and moving less during the pandemic.

If you’re working and learning from home on flexible schedules without the usual commute it means you avoid the morning rush and don’t need to get up so early. Heightened dream recall has been associated with having a longer sleep as well as waking more naturally from a state of REM sleep.

If you’re at home with other people you have a captive audience and time to exchange dream stories in the morning. The act of sharing dreams reinforces our memory of them. It might also prepare us to remember more on subsequent nights.

This has likely created a spike in dream recall and interest during this time.

The pandemic concerns

Dreaming can help us to cope mentally with our waking situation as well as simply reflect realities and concerns.

In this time of heightened alert and changing social norms, our brains have much more to process during sleep and dreaming. More stressful dream content is to be expected if we feel anxious or stressed in relation to the pandemic, or our working or family situations.

Hence more reports of dreams containing fear, embarrassment, social taboos, occupational stress, grief and loss, unreachable family, as well as more literal dreams around contamination or disease are being recorded.

An increase in unusual or vivid dreams and nightmares is not surprising. Such experiences have been reported before at times associated with sudden change, anxiety or trauma, such as the aftermath of the terrorist attacks in the US in 2001, or natural disasters or war.

Those with an anxiety disorder or experiencing the trauma first-hand are highly likely also to experience changes to dreams.

But such changes are also reported by those witnessing events like the 9/11 attacks second-hand or via the media.

Problems solved in dreams

One theory on dreams is they serve to process the emotional demands of the day, to commit experiences to memory, solve problems, adapt and learn.

This is achieved through the reactivation of particular brain areas during REM sleep and the consolidation of neural connections.

During REM the areas of the brain responsible for emotions, memory, behaviour and vision are reactivated (as opposed to those required for logical thinking, reasoning and movement, which remain in a state of rest).

The activity and connections made during dreaming are considered to be guided by the dreamer’s waking activities, exposures and stressors.

The neural activity has been proposed to synthesise learning and memory. The actual dream experience is more a by-product of this activity, which we assemble into a more logical narrative when the remainder of the brain attempts to catch up and reason with the activity on waking.

An alternate history of the great ideas of science

I have always been fascinated by the question “What if?”. It is such a stimulating, mind-exercising question that it helps unravel the mysteries of our very own reality: why things may have happened the way they did. Ultimately, it leads us to understand the wider world as well as our own internal universe, the Self.

I am in fact planning to write a separate article series on the phenomenon of alternate realities, covering its scientific background and philosophical implications. Philip Ball’s piece in Nautilus is a good opening dialogue for this, so I wanted to share it (Nautil.us, Dec. 15, 2016; illustrations by J. Ferrent). He is right that imagining alternative routes to discovery can help to puncture myths. It is also a fact that sometimes discoveries or breakthrough ideas occur to different individuals more or less simultaneously.

Here goes:

“What would physics look like if Einstein had never existed, or biology without Darwin? In one view, nothing much would change—the discoveries they made and theories they devised would have materialized anyway sooner or later. That’s the odd thing about heroes and heroines of science: They are revered, they get institutions and quantities and even chemical elements named after them, and yet they are also regarded as somewhat expendable and replaceable in the onward march of scientific understanding.

But are they? One way to find out is to ask who, in their absence, would have made the same discovery. This kind of “counterfactual history” is derided by some historians, but there’s more to it than a new parlor game for scientists (although it can be that, too). It allows us to scrutinize and maybe challenge some of the myths that we build around scientific heroes. And it helps us think about the way science works: how ideas arise out of the context of their time and the contingencies and quirks of individual scientists.

For one thing, the most obvious candidate to replace one genius seems to be another genius. No surprise, maybe, but it makes you wonder whether the much-derided “great man” view of history, which ascribes historical trajectories to the actions and decisions of individuals, might not have some validity in science. You might wonder whether there’s some selection effect here: We overlook lesser-known candidates precisely because they weren’t discoverers, even though they could have been. But it seems entirely possible that, on the contrary, greatness always emerges, if not in one direction then another.

I say “great man” intentionally, because for all but the most recent (1953) of the cases selected here I could see no plausible female candidate. That’s mostly a consequence of the almost total exclusion of women from science at least until the early 20th century; even if we looked for an alternative to Marie Curie, it would probably have to be a man. But the statistics of scientific Nobel Prizes suggest that we’re not doing much better at inclusion even now. This under-use of the intelligence and creativity of half of humankind is idiotic and shameful, and highlighting the shortfall in an exercise like this is another argument for its value.

Heliocentrism – Johannes Kepler

There are few great discoveries for which one can’t find precedents, and heliocentrism—the idea that the Earth revolves around the sun and not vice versa—is no exception. It’s such a pivotal concept in the history of science, displacing humanity from the center of what was then considered the universe, that anticipations have been well documented before the German-Polish astronomer Nicolaus Copernicus outlined the theory in his epochal De revolutionibus orbium coelestium, published as he lay on his deathbed in 1543.

The Greek mathematician Aristarchus of Samos proposed a scheme like this in the third century B.C., for instance, and in the mid-15th century A.D. the German cardinal Nicholas of Cusa asked if there was a definitive center of the universe at all. What made Copernican theory different was that it was based on a mathematical argument that took careful account of the known movements of the planets.

Copernicus nearly didn’t disclose his ideas at all. It was a young Austrian professor named Georg Rheticus who persuaded him to publish the book, and only just in time. So there’s more reason than ever to wonder, had Copernicus died too soon, who else would have reached the same conclusion.

Other 16th-century astronomers, such as the Germans Erasmus Reinhold and Christopher Clavius, had Copernicus’s mathematical turn of mind and observational acumen, but both were ideologically wedded to geocentrism (an Earth-centered universe). The Dane Tycho Brahe, working in Prague, shook things up in the 1570s with a geocentric model in which the sun orbited the Earth but the other planets orbited the sun.

But I think the leap to heliocentrism would not otherwise have happened until the early 17th century. We know that Galileo got into trouble for promoting the Copernican theory a little too aggressively for the taste of the Catholic Church in Rome—and he was iconoclastic and skillful enough to have had the idea himself. But I suspect that Tycho’s protégé and Galileo’s correspondent, the German Johannes Kepler, would have done it first. He had access to Tycho’s excellent observational data, he was mathematically adept, but crucially he also had Copernicus’s dash of (to our eyes) mysticism that saw a sun-centered universe as appropriately harmonious. To dare to put the sun in the middle, you needed not just rational but also aesthetic motives, and that was Kepler all over.

Laws of motion – Christiaan Huygens

It’s easy to get the impression that Isaac Newton was thinking on a different plane from his contemporaries in the late 17th century. While Robert Boyle, one of the most illustrious luminaries of the Royal Society, was a master experimentalist who hesitated to frame hypotheses about his observations, and Newton’s bitter rival Robert Hooke was a genius at instrumentation but tended to confuse a promising thought with a thorough explanation, Newton seemed able to make the abstract leap from close observation to underlying principles. Most famously and impressively, he transformed astronomy from a theory of how the heavenly bodies moved to an account of why they do so: A single law of gravitational attraction was all you needed to explain the shapes of planetary and lunar orbits and the trajectories of comets.

All this was laid out in Newton’s Principia, published in 1687 after being instigated to eclipse Hooke’s reckless claim that he could explain the ellipse-shaped planetary orbits. Before he could deal with planets, Newton had to set down his basic laws of motion. The three that he described in Book I are the foundation of classical mechanics. In short: Bodies maintain their state of uniform motion or rest unless forces act on them; force equals mass times acceleration; and for every action there is an equal and opposite reaction.

They are concise, complete, and simply stated, almost heart-breaking in their elegance. Could anyone else have managed that feat in Newton’s day?

Not at the Royal Society, I think, the ranks of which were filled as much by gentleman dilettantes like Samuel Pepys as by true proto-scientists like Boyle and Hooke. But there was at least one genius among the Society’s many continental correspondents who might have risen to the challenge. Dutchman Christiaan Huygens was polymathic even by the ample standards of his day: a mathematician, astronomer (he made some of the first observations of Saturn’s rings), inventor, and expert in optics and probability. He was especially good at devising clocks and watches, which occasioned a priority dispute with the irascible Hooke. Huygens’ theorems on mechanics in his book on the pendulum clock in 1673 were taken as a model by Newton for the Principia.

Newton’s first law was scarcely his anyway: Known also as the law of inertia (a moving object keeps moving in the absence of a force), it was essentially stated by Galileo, and Huygens embraced it too. The Dutchman’s studies of collisions hover on the brink of stating the third law, while Huygens actually wrote down a version of the second law independently. He had what it took to initiate what we now call Newtonian mechanics.

Special relativity – James Clerk Maxwell

One reason why it is more than just fun to imagine alternative routes to discovery is that it can help to puncture myths. The story of Einstein imagining his way to special relativity in 1905 by conceptually riding on a light beam captures his playful inventiveness but gives us little sense of his real motives. Nor were they, as often suggested, to explain why the experiments of Albert Michelson and Edward Morley in the 1880s had failed to detect the putative light-bearing ether. Einstein was inconsistent about the role of those experiments in his thinking, but it clearly wasn’t a big one.

No, the reason special relativity was needed was that the equations devised by the Scottish scientist James Clerk Maxwell in the 1860s to account for the unity of electric and magnetic phenomena predict the speed of light. Speeds are usually contingent things—the speed of sound, for instance, depends on the medium it travels through. But if the laws of physics, such as Maxwell’s equations of electromagnetism, stay the same regardless of how fast your frame of reference is (steadily) moving, then the speed of light shouldn’t depend on relative motion. Einstein’s theory of special relativity took that as a basic postulate and asked what followed: namely, that space contracts and time slows for objects in motion.

There was already an attempt to square electromagnetic theory with relative motion before Einstein, concocted by the Dutch physicist Hendrik Lorentz. It too invoked contraction of space and time, after a fashion—but did so with a view to preserving the ether. It’s tempting to suppose that Lorentz would have eventually reached the same conclusion as Einstein—that it was better to discard the ether—and so to anticipate that Lorentz would have discovered special relativity in Einstein’s absence. But I am taking the liberty of awarding that realization to a man who was dead in 1905, namely Maxwell himself. He died in 1879 aged just 48, and was very much active until the end. He had the kind of deep intuition in physics that was needed for the remarkable feat of adding electricity to magnetism and producing light. Given two more decades (and the seeds of doubt sown about the ether) I suspect he would have figured it out. You don’t need to take my word for it: Einstein said it himself. “I stand not on the shoulders of Newton,” he said, “but on the shoulders of Maxwell.”

General relativity – Hermann Minkowski

In 1916 Einstein unveiled a new view of gravity, which superseded the theory of Isaac Newton that had reigned for over two centuries. He argued that the force we call gravity arises from the curvature of space and time (the four-dimensional fabric called spacetime) in the presence of mass. This curvature causes the acceleration of bodies in a gravitational field: the steady speeding up of an object falling to Earth from a great height, say. This was the theory of general relativity, which is still the best theory of gravitation that exists today and explains the orbits of the planets, the collapse of stars into black holes, and the expansion of the universe. It is Einstein’s most remarkable and revered work.

Permit me again to bend the rules of the game here—for once more, Einstein might have been beaten to it if another scientist had not died first. That person was Hermann Minkowski, a German mathematician under whom Einstein had studied in Zurich. Much of Minkowski’s work was in pure mathematics, but he also worked on problems related to physics.

In 1908 Minkowski explained that the proper way to understand Einstein’s theory of special relativity—which was all about bodies moving at constant speeds, not accelerating—was in terms of a four-dimensional spacetime. Einstein was at first skeptical, but he later drew on the concept to formulate general relativity.

Minkowski was already alert to the implications, however. Crucially, he saw that whereas the path of an object moving at a constant speed in spacetime is a straight line, that of an accelerating object is curved. In three-dimensional space, the path of the moon orbiting the earth under the influence of their gravitational attraction is more or less circular. But the four-dimensional worldline of the orbiting moon is a kind of helix: It goes round and round in space, but returns to the same position in space at a different time.

There’s more to general relativity than that. It is mass itself, Einstein said, that deforms spacetime into this curving, so-called non-Euclidean (not flat) shape. But the idea of a non-Euclidean spacetime was Minkowski’s, and it’s quite conceivable that he would have fleshed out the idea into a full-blown gravitational theory, perhaps working together with the formidable mathematician David Hilbert at the University of Göttingen, where Minkowski was based starting from 1902.

We do know, from a lecture Minkowski gave at Göttingen in 1907, that he was already thinking about gravitation in the context of relativity and spacetime. But we’ll never know how far he would have taken that, had he not died suddenly at the start of 1909, aged just 44.

Quanta – J. J. Thomson

This must be one of the most incidental, even reluctant, of great discoveries. Heck, German physicist Max Planck did not even want to discover quanta in 1900. He called them a “fortunate guess,” a mere mathematical trick to make his equations comply with what we actually see. Trying to understand how warm bodies emit radiation (think of filament light bulbs, or stars), he proposed that the energy of the vibrating constituent particles be divided into packets or “quanta” with energies proportional to the vibrational frequencies. The resulting solutions of the equations matched experiments, but Planck hesitated to assume that this quantization of energy was a real phenomenon: He advised that the quantum hypothesis be introduced into physics “as conservatively as possible.”

The expert on this “blackbody radiation” was Wilhelm Wien, who won a Nobel Prize for his work. Wien came within a whisker of other important discoveries too: In 1900 he decided that E = (3/4)mc² (spot the problem?), and he first saw protons in 1898 but didn’t know what he had discovered. Yet I think Wien was too much a traditionalist to have risked Planck’s quanta.

No—I think that, were it not for Planck’s work on blackbody radiation, quantization of energy would have been deduced from another direction. Because of this quantization, atoms absorb and emit light only at very specific frequencies, for which the light quanta have just the right amount of energy to enable an electron orbiting the atom to jump from one quantized energy state to another. It is because electrons in atoms can only adopt these discrete energy states and not ones in between that atoms are stable at all, the electrons being unable (as classical physics would predict) to gradually lose energy and spiral into the electrically positive nucleus. So as the theory of the internal structure of atoms evolved in the early 20th century, sooner or later quantization would have been an unavoidable inference.

But who would have inferred it? Ernest Rutherford was the expert on atomic structure, but too much the experimentalist and temperamentally resistant to wild speculation. The Dane Niels Bohr was the first to suggest the quantum view of the atom, but only because he had Planck and Einstein’s work on energy quanta to draw on. I can’t help wondering if the English physicist J. J. Thomson, who discovered electrons themselves and under whom both Rutherford and Bohr worked for a time, might have suspected it. He was an expert on atomic theory, the mentor of Charles Glover Barkla who studied quantum jumps in atoms due to X-ray emission, and lived until 1940. He would have been well placed to give quantum theory a less timid origin.

Structure of DNA – Rosalind Franklin

I’d love to think that Rosalind Franklin, the English crystallographer whose data were central to the discovery of the double-helical structure of DNA, would have figured it out if James Watson and Francis Crick hadn’t done so first in 1953. It was famously only when Watson saw the pattern of X-rays scattered from DNA and recorded by Franklin and student Raymond Gosling that he became convinced about the double helix. He was shown these data by Maurice Wilkins, with whom Franklin had a prickly working relationship at King’s College London—and Wilkins did not have Franklin’s permission for that, although any impropriety has been overplayed in accounts that make Franklin the wronged heroine. In any event, those data triggered Watson and Crick’s deduction that DNA is a helix of two strands zipped together via weak chemical bonds between the gene-encoding bases spaced regularly along the backbones.

The urge to award the “alternative discovery” to Franklin is heightened by the ungallant, indeed frankly misogynistic, treatment she got from Watson in his racy but somewhat unreliable 1968 memoir of the discovery, The Double Helix. Watson is rightly haunted now by his chauvinistic attitude.

I was worried, though, that Franklin—cautious, careful and conservative by instinct in contrast to the brilliant, intuitive Crick and the brash young Watson—wouldn’t have stuck her neck out on the basis of what by today’s standards is rather flimsy evidence. She knew that a female scientist in those days couldn’t afford to make mistakes.

So I was delighted when Matthew Cobb, a zoologist at the University of Manchester who delved deeply into the DNA story for his 2015 book Life’s Greatest Secret, confidently told me that, yes, Franklin would have done it. “The progress she made on her own, increasingly isolated and without the benefit of anyone to exchange ideas with, was simply remarkable,” Cobb wrote in The Guardian. Just weeks before Watson and Crick invited Franklin and Wilkins to see their model of DNA in March 1953, Franklin’s notebooks—studied in detail by British biochemist Aaron Klug, who won a Nobel Prize for his own work on DNA—show that she had realized DNA has a double-helix structure and that the two strands have complementary chemical structures, enabling one to act as a template for replication of the other in the way Watson and Crick famously alluded to in their discovery paper in Nature that April.

“Crick and I have discussed this several times,” wrote Klug in the Journal of Molecular Biology. “We agree she would have solved the structure, but the results would have come out gradually, not as a thunderbolt, in a short paper in Nature.” At any rate, her contributions to the discovery are undeniable. “It is clear that, had Franklin lived, the Nobel Prize committee ought to have awarded her a Nobel Prize, too,” writes Cobb.

The other contender for the discovery is American chemist Linus Pauling, who was the Cambridge duo’s most feared rival. Pauling had impetuously proposed a triple-helical structure of DNA in early 1953 with the backbones on the inside and the bases facing out. It made no chemical sense, as Watson and Crick quickly appreciated to their great relief. Unperturbed by such gaffes, Pauling would have bounced back. But he didn’t have Franklin’s X-ray data. “Pauling was a man with great insight, but not a magician who could manage without data,” wrote Klug.

Natural selection – ?

Sometimes discoveries or breakthrough ideas occur to different individuals more or less simultaneously. It happened with calculus (Leibniz and Newton), with the chemical element oxygen (Scheele, Priestley, and Lavoisier), and most famously, with evolution by natural selection, announced in 1858 by Charles Darwin and Alfred Russel Wallace.

It’s tempting to suppose that at such moments there was “something in the air”: that the time for discovery was ripe and it would inevitably have occurred to someone sooner or later. If that’s so, it shouldn’t be too hard to identify other candidate discoverers. If we expunge both Darwin and Wallace from the picture, who could fill their shoes?

Well, here’s the thing. Darwin had plenty of advocates after he published On the Origin of Species, but I struggle to see how any of them would have deduced the theory by themselves. As for Wallace, his evolutionary theory was not at all identical to Darwin’s anyway, despite what Darwin himself said: as his biographers Adrian Desmond and James Moore say, in part he “read his own thought” into Wallace’s account.

I wondered if my floundering for candidates just betrayed my own ignorance or lack of imagination. So I asked historian and philosopher of science James Lennox of the University of Pittsburgh, an expert on the history of Darwinian theory, who might have done the job in place of Darwin and Wallace. His answer was striking: The story might not have gone that way at all.

“When you read through Darwin’s Species Notebooks and see the struggle he went through, and then you compare his first and second attempts to present it coherently (in 1842 and 1844) with the Origin, I think it is equally plausible that some very different theory of evolution might have won the day,” says Lennox. After all, alternatives to Darwinian thought were still debated in the late 19th and early 20th centuries, when, Lennox says, “a variety of non-Darwinian theories were at least as popular as Darwin’s.” Some prominent geneticists, such as the Dutchman Hugo de Vries, supposed that evolutionary change happens in jumps (saltation) rather than by Darwin’s gradual change—and as far as the “macroevolution” of whole groups of species goes, that idea never went away: It’s comparable to the “punctuated equilibrium” model of modern-day biologists Stephen Jay Gould and Niles Eldredge.

But surely Darwinian natural selection is the “right” theory, so we’d have got there eventually? Well yes—but it’s still debated whether the disturbingly tautological “survival of the fittest” is the best way to think about it. Darwin’s view is now augmented and adapted, for example to include the effects of random genetic drift and to unify a view of evolutionary change with accounts of developmental biology (so-called “evo-devo”). Might we have got to the current position, then, without an origin-of-species-type account at all? “I think that is entirely possible,” says Lennox.

That tells us something about this entire counterfactual enterprise. Science provides us with theories that are objectively useful in explaining and predicting what we see in the world. But that doesn’t deny the fact that specific theories generally have a particular style in terms of what they express or stress, or of what metaphors they use: Darwinism has no need of the “selfish gene,” for example. Similarly, in an entirely different field, quantum electrodynamics could have been constructed without using the celebrated language of Feynman diagrams devised by Richard Feynman. It seems entirely possible that the way we conceptualize the world bears the imprint of those who first proposed the concepts. Scientists may be less replaceable than we think.”

Why Do Autumn Leaves Change Color?

Scientific American editor Mark Fischetti explains how the leaves of deciduous trees perform their annual chameleon act, changing from various shades of green to hues of bronze, orange and brilliant red.

I loved watching and learning, so I wanted to share this:

Scientists Claim to Solve Riddle of Life’s Chemical Origins

The question of how life got its start on Earth is a tricky one. Life as we know it needs a genetic mechanism like DNA or RNA to record blueprints for proteins, but proteins are required for the replication of DNA in the first place — and none of that is likely to happen without a cell membrane made of lipids to keep unwanted chemicals out. Biologists have argued for years over which of these three systems might have emerged first, but new research suggests a radical solution to the chicken-and-the-egg conundrum: All three developed at once.

John Sutherland and his team at the University of Cambridge show in a paper published this week in Nature Chemistry that all the elements and energy to create the three systems would have been present in the primordial soup of early Earth. The research shows how the common compounds hydrogen sulfide and hydrogen cyanide, in a bath of UV light and water, could morph into dozens of nucleic acids and lipids. With all the building blocks formed simultaneously, the rest of the process was free to take place.

Perhaps the one prominent theory discounted by Sutherland’s work is exogenesis, the idea that life may have been brought to Earth by a meteor or other celestial object. But even that gets a nod: Meteoric bombardment would have helped supply hydrogen cyanide, iron and other essential elements.

Books worth reading, as recommended by Bill Gates, Susan Cain and more…

Trees can talk to and help each other using an internet of fungus!

Film fans might be reminded of James Cameron’s 2009 blockbuster Avatar. On the forest moon where the movie takes place, all the organisms are connected. They can communicate and collectively manage resources, thanks to “some kind of electrochemical communication between the roots of trees”. Back in the real world, it seems there is some truth to this.

In a recent BBC article, I read about mycelia, the fungal networks that help plants sharing common soil communicate.

Read on here, and you will be amazed:

http://www.bbc.com/earth/story/20141111-plants-have-a-hidden-internet

It’s not like we are connected in a new-agey oohm-y way, but science backs up the interconnectivity among living beings. And for me, that is great enough.

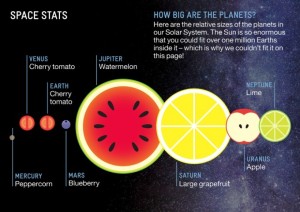

Relative Scale of the Planets, in Fruit 😉

Scientists make enzyme that could help explain origins of life

I recently read an article about developments in our understanding of how life emerged. The related research was published in the journal Nature.

Mimicking natural evolution in a test tube, scientists at The Scripps Research Institute (TSRI) have devised an enzyme with a unique property that might have been crucial to the origin of life on Earth.

Aside from illuminating one possible path for life’s beginnings, the achievement is likely to yield a powerful tool for evolving new and useful molecules.

We are one step closer to unveiling a mystery… Nice!

Scientists use stem cells to regenerate human corneas

One of our less evolved organs, the EYE, is getting some nudge from the scientific community, thanks to stem cell research. http://t.co/YJK6NsVhi1

According to a BBC report, researchers have been able to hunt down elusive cells in the eye that are capable of ‘regeneration’ and ‘repair’. They transplanted these regenerative stem cells into mice, creating fully functioning corneas.

Writing in the journal Nature, the researchers say this method may one day help restore the sight of victims of burns and chemical injuries.

In the USA, two patients with eye disease were injected with stem cells, and both apparently showed some slight improvement in vision.

And in the UK, London’s Moorfields Eye Hospital is conducting the UK leg of trials involving human embryonic stem cells. http://www.bbc.com/news/health-16710538

I am glad that one of our less evolved organs, the eye, is getting some nudge from the scientific community, thanks to stem cell research.